Once I learned how to “train” the Stable Diffusion software with hand-picked photos of my kids, I was able to create a funny little meme of Kyra. I’ve since learned a bit more about LoRAs, which are “low-rank adaptation” models used to add a specific person, object, or art style to an existing training set. In other words, I can gather twenty or thirty photos of Kyra, run them through some high-powered GPU-based processing, and incorporate them into my AI toolset as I create imagery.

For example, here’s what the AI came up with when I asked for a photo of Kyra in the winter.

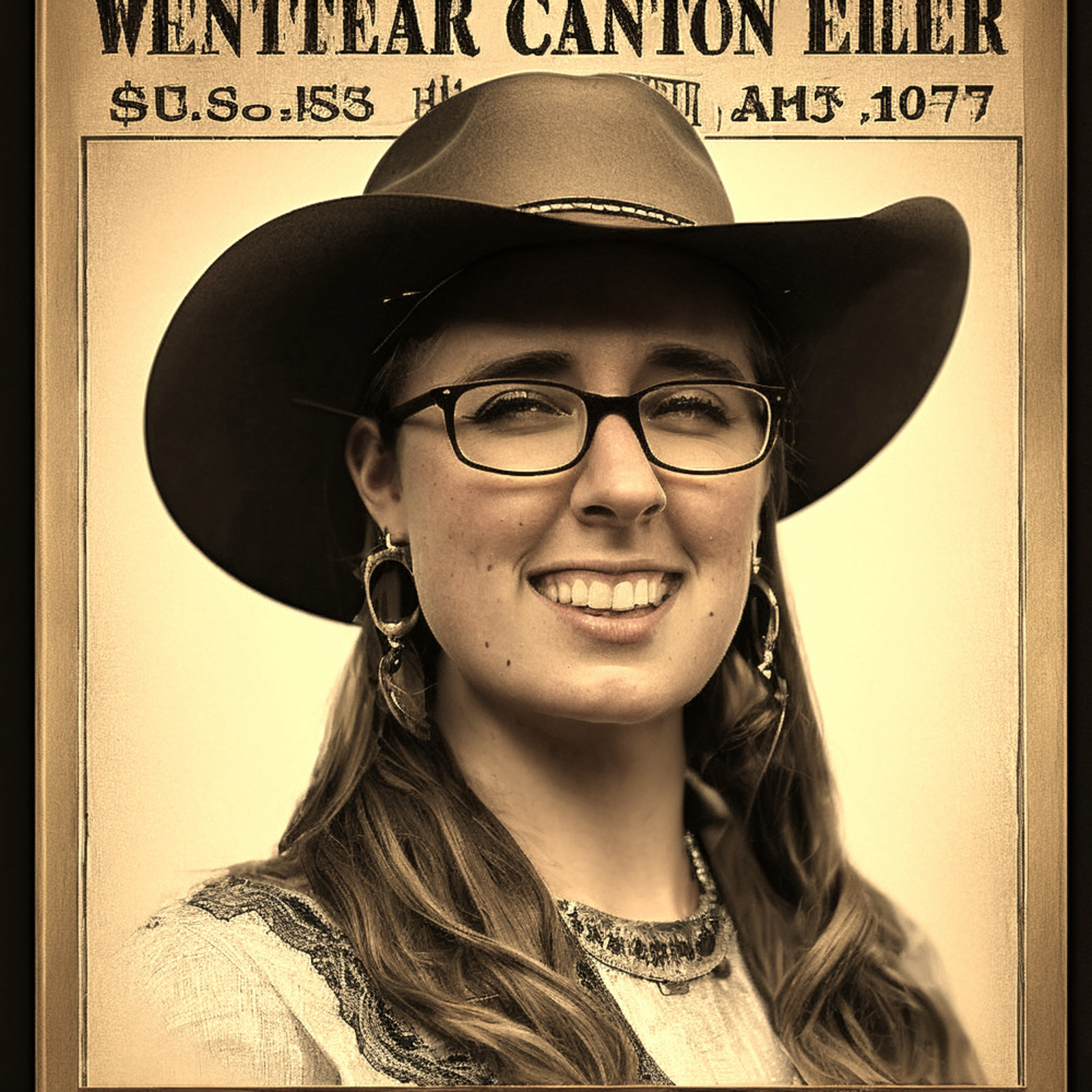

Or, what she might look like wearing a cowboy hat in an “Old West style photograph”.

Obviously these aren’t quite Kyra, but they’re close enough to pass a cursory inspection. They’re generated completely from whole cloth: the clothing, background, and everything else is dreamed up by nothing more than clever software. It accurately captures her hair color and style, recognizes that she’s often photographed wearing dangling earrings, matches the style of her glasses, and even includes the dimple on her right cheek. Whoa.

After a hearty online game of Bang with all three kids today, I prompted the AI for an “Old West style wanted poster” of Kyra (to be used in a meme, naturally).

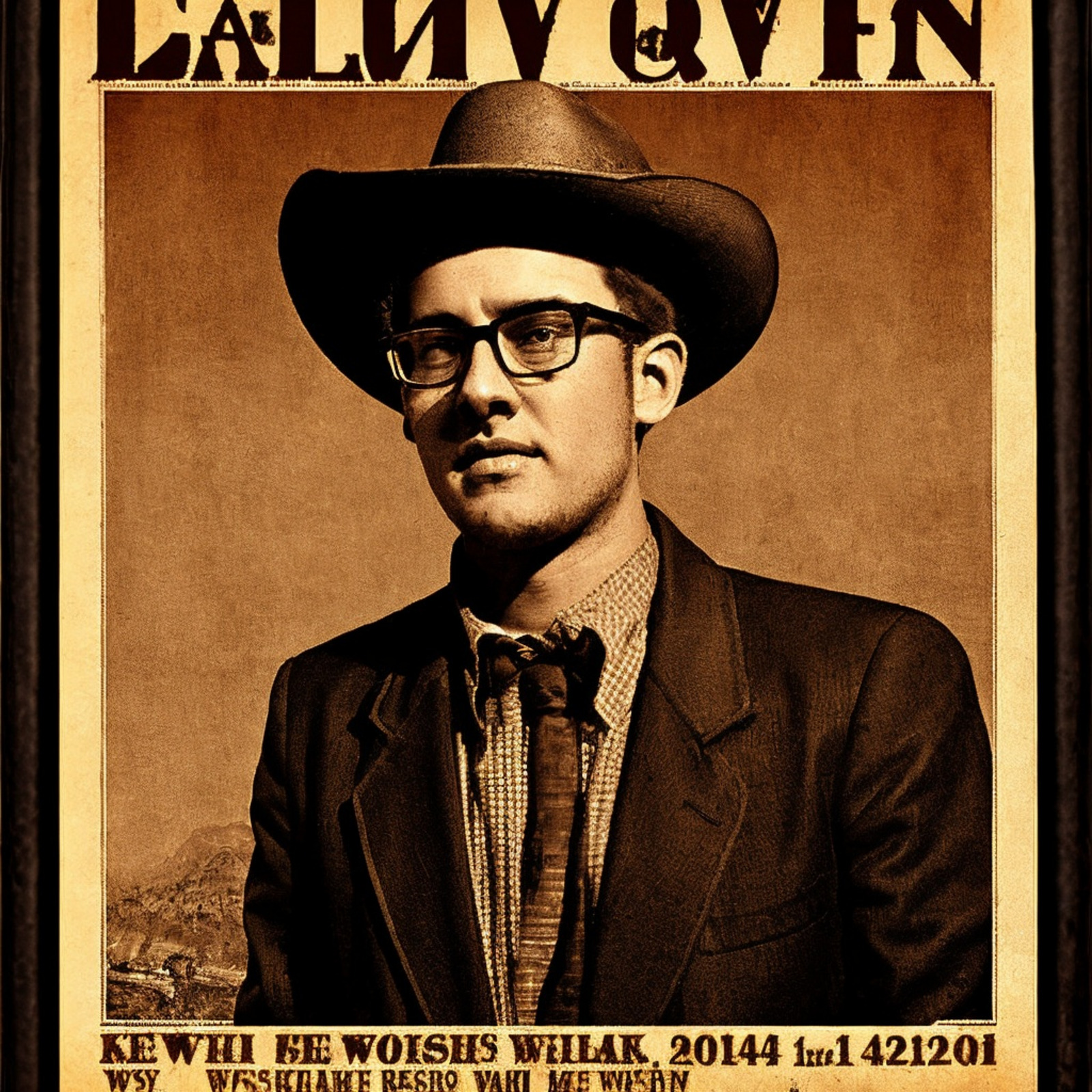

As usual, the AI struggles with text, but again it’s a stunning likeness of my girl. Zack wondered what he would look like, and I obliged:

This one captures the essence of Zack, but I feel like it’s not quite as accurate as Kyra’s results. I suspect I need to refine my training methodology a bit, and continue learning how to write better prompts. So there’s still some work to be done, but the humor value of some of these is pretty high. And it’s a good reminder of the incredible power of AI-generated imagery…